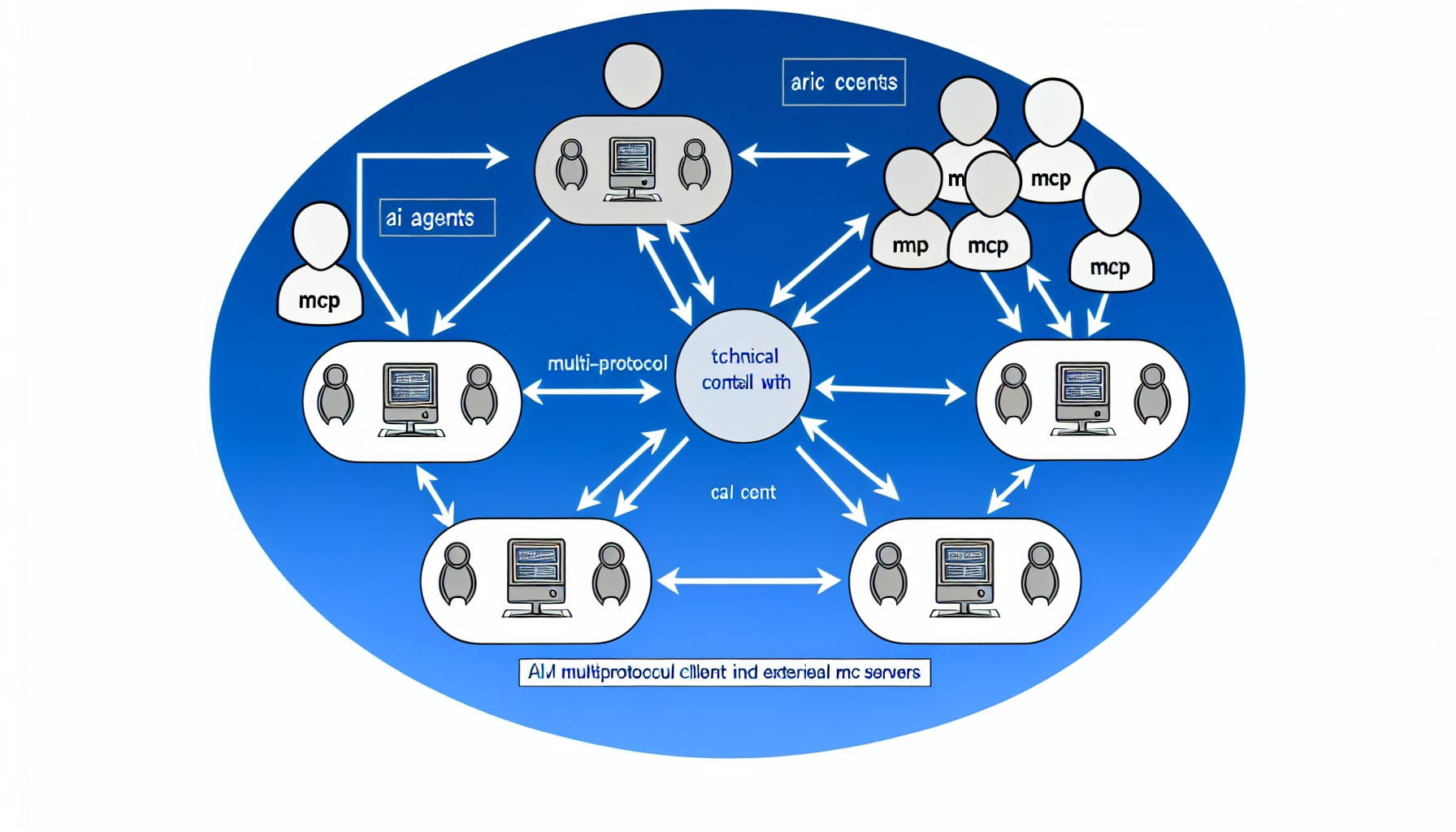

AI agents are becoming incredibly capable, but they often operate in a bubble. Without a way to connect to the outside world, they can’t access real-time information, interact with your local files, or use external services. This isolation severely limits their practical use. The Model Context Protocol (MCP) was created to solve this very problem. It acts as a universal standard—think of it as a USB-C port for AI—that allows models to communicate with external tools and data sources.

At the heart of this system is the MCP client, the component that lives inside your AI application and initiates these connections. This article will be your guide to understanding, building, and leveraging MCP clients. We’ll explore what they are, examine their technical architecture, walk through practical code examples for creating your own, and survey the growing ecosystem. By the end, you’ll know how to give your AI agents the superpowers they need to become truly useful assistants.

Let’s begin by breaking down what an MCP client is and the crucial role it plays.

Understanding the MCP Client: What It Is and Why It Matters

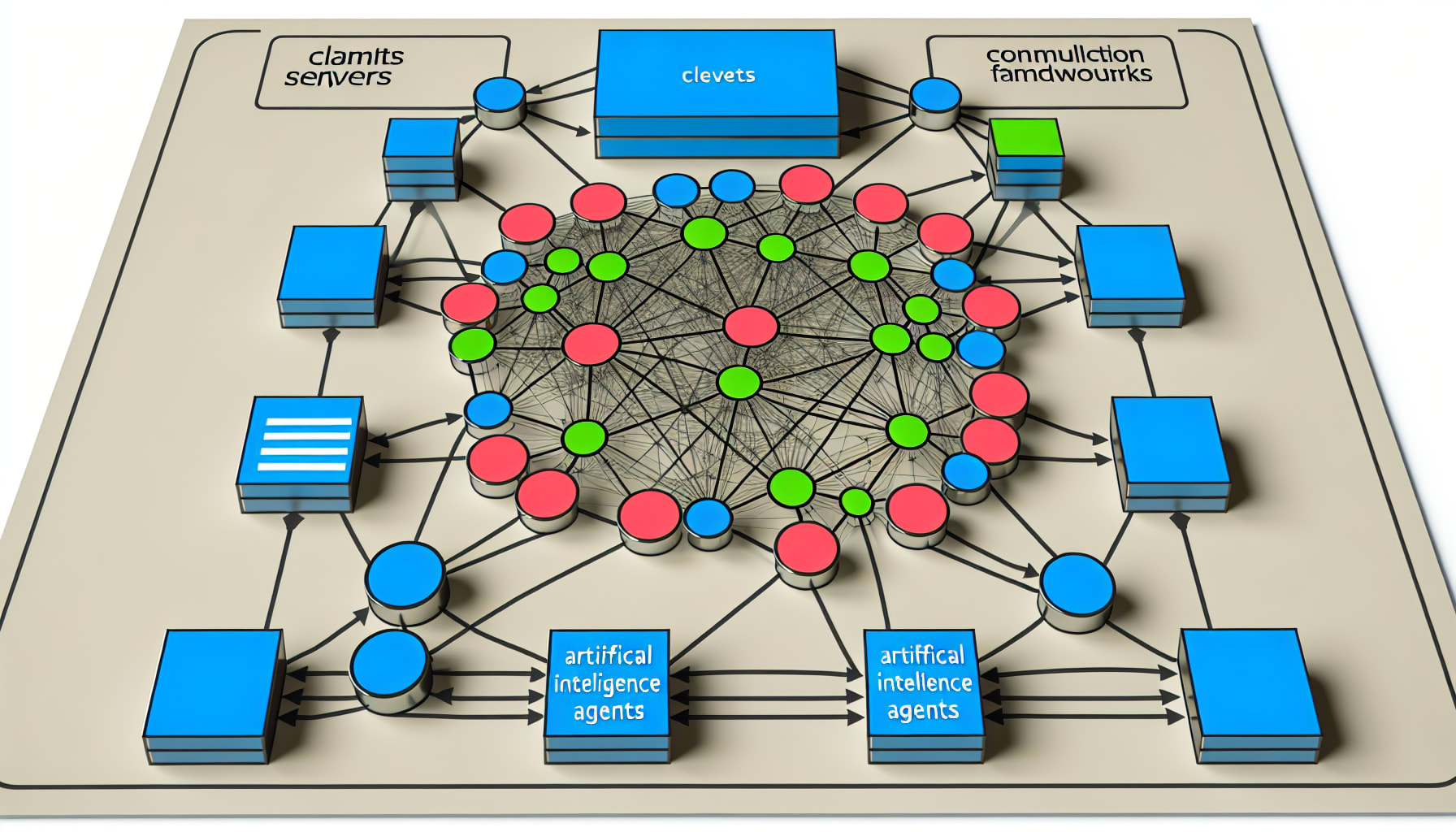

The Model Context Protocol operates on a classic client-server model. The MCP server exposes tools, and the MCP client consumes them. The client runs inside the “host” application, which could be anything from a desktop application like Claude Desktop to a custom Python script running an AI agent. It’s the initiator, the component that reaches out to get the information or action the AI needs.

An MCP client is the component within an AI application that initiates requests to external MCP servers, enabling the AI agent to discover and use tools like web search or database access.

A Brief on the Model Context Protocol (MCP)

Before diving deeper into the client, it’s important to understand the protocol it serves. MCP is an open standard designed to be a universal bridge between AI models and the outside world. Instead of developers building dozens of bespoke, one-off integrations for every tool and every model, MCP provides a single, standardized interface.

Think of Model Context Protocol as a universal intermediate protocol layer between a language model and the outside world. It’s essentially the “USB-C of AI integration” – one plug that connects to many devices.

This standardization means a developer can create an MCP server for a tool like searching a database, and any MCP-compliant client can use it, regardless of whether it’s powering a Claude, GPT, or Llama-based agent. This fosters a reusable and modular ecosystem.

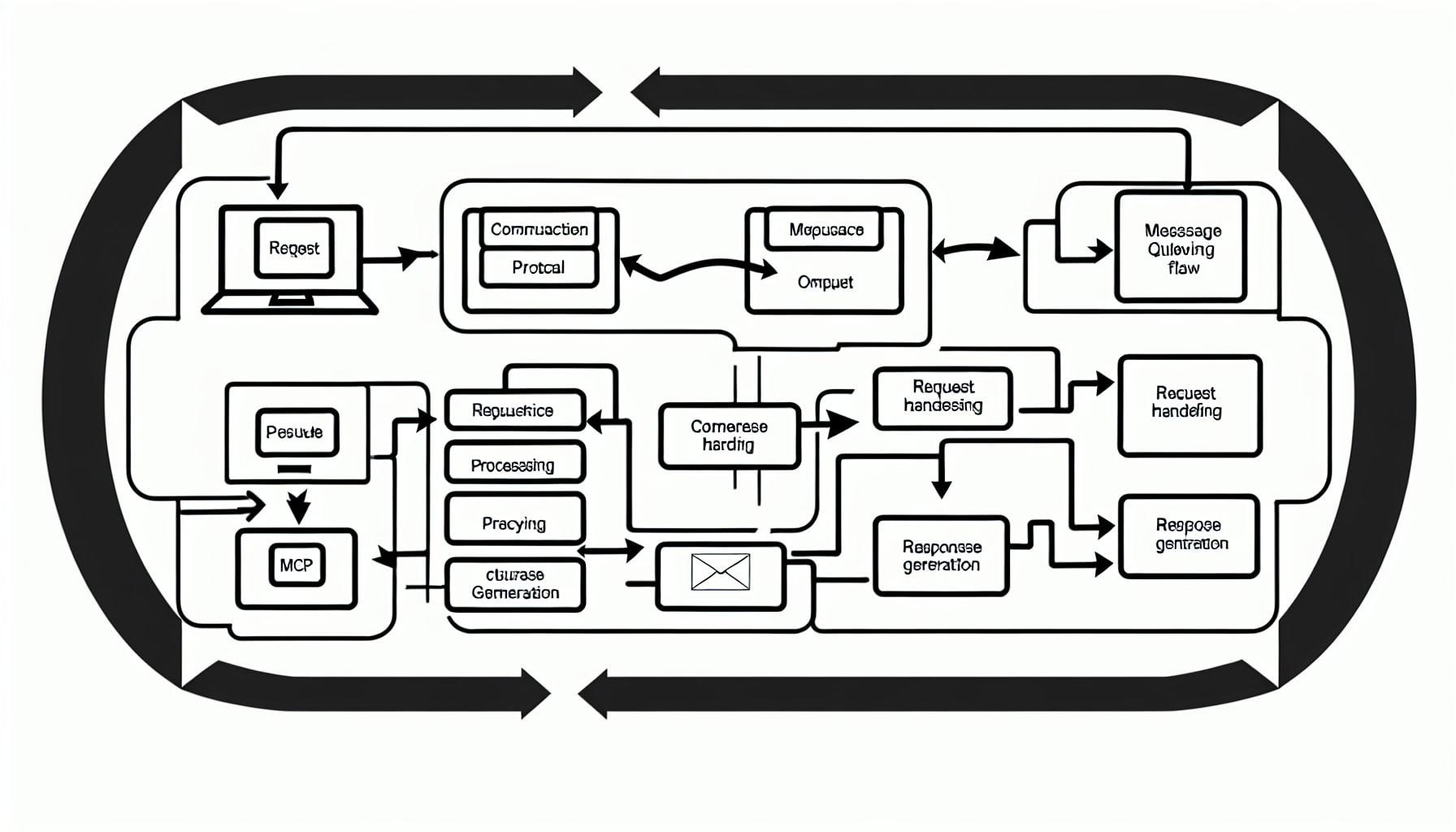

The Client’s Core Responsibilities

The MCP client does more than just blindly send requests. Its role is multifaceted. First, it establishes a persistent connection to an MCP server and completes an initialization handshake. It then asks the server for its tool list (the tools/list method in the MCP specification) and parses the reply to understand what capabilities the server offers. When the AI agent decides to use a tool, the client formats a structured JSON request and sends a tools/call. It then waits for the result, which may be streamed depending on the transport, and hands the complete content back to the agent. It also handles errors and knows when the server is done. This orchestration makes the interaction seamless for the agent.
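To make that sequence concrete, here is a minimal sketch of the discover-then-call loop. It assumes the JSON-RPC 2.0 framing and the `tools/list` and `tools/call` method names from the published MCP specification. `FakeServer` and `SketchClient` are hypothetical names invented for illustration, the server lives in memory rather than behind a real transport, and the initialization handshake is left out for brevity.

```python
import itertools
import json


class FakeServer:
    """Hypothetical in-memory stand-in for an MCP server (no network involved)."""

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": "echo",
                                 "description": "Echo text back",
                                 "inputSchema": {"type": "object"}}]}
        elif method == "tools/call":
            text = request["params"]["arguments"].get("text", "")
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        else:
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


class SketchClient:
    """Illustrative client: numbers requests, sends them, and unwraps replies."""

    def __init__(self, server):
        self._server = server
        self._ids = itertools.count(1)

    def _request(self, method, params=None):
        message = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            message["params"] = params
        reply = json.loads(self._server.handle(json.dumps(message)))
        if "error" in reply:  # surface protocol errors to the caller
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]

    def list_tools(self):
        return [tool["name"] for tool in self._request("tools/list")["tools"]]

    def call_tool(self, name, arguments):
        result = self._request("tools/call", {"name": name, "arguments": arguments})
        # Join the text items so the agent receives one complete string.
        return "".join(c["text"] for c in result["content"] if c["type"] == "text")


client = SketchClient(FakeServer())
tools = client.list_tools()
answer = client.call_tool("echo", {"text": "hello"})
```

The agent only ever sees `list_tools()` and `call_tool()`; request ids, serialization, and error handling stay inside the client.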

The AI Agent and the MCP Client

The relationship between an AI agent and its MCP client is one of collaboration. The agent provides the intent, and the client handles the execution. For example, an agent might reason, “To answer this question, I need to know the latest news from TechCrunch.” It then passes this goal to the MCP client. The client, knowing it’s connected to a web-scraping MCP server, formulates the correct tools/call request, sends it, processes the response, and hands the clean data back to the agent. The agent doesn’t need to know about HTTP requests or JSON parsing; it just knows it can ask for information and receive it. This separation of concerns is what makes the system so powerful and scalable.

The Technical Architecture: How MCP Clients Work Under the Hood

The design of the MCP client is built on proven web technologies, prioritizing robustness and simplicity. It avoids unnecessary complexity to ensure it can be implemented easily across different platforms and programming languages. Understanding this foundation is key to both using existing clients and building your own.

MCP clients primarily use Server-Sent Events (SSE) over HTTPS for real-time, unidirectional communication, secured by bearer tokens and structured with JSON messages.

Transport Protocols and Message Formats

The most common transport for remote MCP servers is Server-Sent Events (SSE) over a standard HTTPS connection. SSE allows a server to push data to a client in real time over a single, long-lived connection, while the client sends its own requests as ordinary HTTP POSTs. This suits streaming tool outputs back to an agent without the overhead of traditional polling or the bidirectional complexity of WebSockets. For local communication, such as when an MCP server runs as a command-line tool on the same machine, stdio (standard input/output) is often used.
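If you’re curious what a client actually does with an SSE stream, the sketch below parses the text format into events. It covers only the `event:` and `data:` fields plus comment lines, and the sample stream is invented for illustration. A production client would normally rely on an HTTP library with SSE support rather than hand-rolled parsing.

```python
import json


def iter_sse_events(lines):
    """Yield (event_name, data) pairs from an iterable of SSE text lines."""
    event, data = "message", []
    for line in lines:
        line = line.rstrip("\r\n")
        if line == "":                      # a blank line terminates one event
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):          # comment, often used as keep-alive
            continue
        elif line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            value = line[len("data:"):]
            if value.startswith(" "):       # drop the single optional space
                value = value[1:]
            data.append(value)


# Invented sample: one keep-alive comment followed by one JSON payload.
SAMPLE_STREAM = [
    ": keep-alive",
    "event: message",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}',
    "",
]

events = [(name, json.loads(data)) for name, data in iter_sse_events(SAMPLE_STREAM)]
```

Because the function is a generator, results can be handed to the agent as each event arrives instead of waiting for the connection to close.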

Regardless of the transport, all communication uses simple JSON objects, which the official MCP specification frames as JSON-RPC 2.0 messages. Key methods include tools/list (to discover available tools) and tools/call (to invoke a tool), and results come back as a list of content items. This standardized format is what makes the protocol universally interoperable.
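As a rough illustration of those JSON objects, the sketch below sorts incoming messages into the four shapes that JSON-RPC 2.0 defines, which is the framing the published MCP specification uses. The `classify` helper is a hypothetical name, and the sample payloads are invented.

```python
import json


def classify(message: dict) -> str:
    """Return which kind of JSON-RPC 2.0 message this dictionary represents."""
    if "method" in message:
        # Requests carry an id and expect a reply; notifications do not.
        return "request" if "id" in message else "notification"
    if "error" in message:
        return "error"
    if "result" in message:
        return "response"
    raise ValueError("not a JSON-RPC 2.0 message")


wire_messages = [
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}',
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}',
    '{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}',
    '{"jsonrpc": "2.0", "id": 2, "error": {"code": -32601, "message": "Method not found"}}',
]

kinds = [classify(json.loads(raw)) for raw in wire_messages]
```

A client typically matches each response or error back to the pending request by its `id`, which is why the first and third samples share the same value.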

Authentication and Security Mechanisms

Security is a primary concern when giving AI agents access to external tools. Remote MCP deployments address this by using HTTPS for encrypted communication and bearer tokens for authentication. The client presents a secret token, for example a 256-bit string issued by the server’s dashboard, with each request. This ensures that only authorized clients can access the server’s tools.
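Here is a small sketch of what presenting a bearer token looks like in practice, using only the standard library. The URL is a placeholder, `new_token` and `build_request` are hypothetical helpers, and in a real deployment the token would be issued by the server rather than generated on the client. Nothing is sent over the network; the code only builds the request object.

```python
import secrets
import urllib.request


def new_token() -> str:
    """Generate a random 256-bit secret (32 bytes, shown as 64 hex characters)."""
    return secrets.token_hex(32)


def build_request(url: str, token: str, payload: bytes) -> urllib.request.Request:
    """Build (but do not send) an authenticated POST to an MCP endpoint."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send a bearer token over a non-HTTPS URL")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


token = new_token()
# Placeholder endpoint, assumed for illustration only.
request = build_request("https://mcp.example.com/mcp", token, b"{}")
```

Refusing plain HTTP on the client side is a cheap safeguard: a bearer token sent unencrypted can be replayed by anyone who observes it.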

This design creates a secure sandbox. The agent isn’t executing arbitrary code; it’s making controlled requests to a server that has its own set of permissions and safeguards.

While MCP tools significantly enhance the capabilities of AI agents, they also introduce serious risks if not properly managed. Granting an agent access to tools like file systems, payment processors, or external APIs means it can perform real-world actions with real-world consequences. Without strict controls, validation, and sandboxing, a poorly instructed or malicious agent could delete data, leak sensitive information, or make unauthorized transactions.
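One simple control is to validate every tool call against an allowlist before it leaves the client. The sketch below is an illustration under stated assumptions, not a complete security layer: the tool names, the permitted argument sets, and the `check_tool_call` helper are all hypothetical.

```python
# Hypothetical policy: tool name -> argument names the agent may supply.
ALLOWED_TOOLS = {
    "read_file": {"path"},
    "web_search": {"query"},
}


class ToolCallRejected(Exception):
    """Raised when a requested tool call falls outside the policy."""


def check_tool_call(name, arguments, allowed=ALLOWED_TOOLS):
    """Return (name, arguments) unchanged if permitted, otherwise raise."""
    if name not in allowed:
        raise ToolCallRejected(f"tool {name!r} is not on the allowlist")
    unexpected = set(arguments) - allowed[name]
    if unexpected:
        raise ToolCallRejected(f"unexpected arguments for {name!r}: {sorted(unexpected)}")
    return name, arguments


approved = check_tool_call("web_search", {"query": "model context protocol"})
```

Checks like this complement, rather than replace, permissions enforced on the server side, since the server is the component that actually performs the action.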

Local Sidecar and Multi-Mode Connection

When you use a desktop application like Claude with a local MCP tool (e.g., one that accesses your filesystem), you’re often using a “sidecar” pattern. An application like Claude Desktop can spawn a small, separate process, such as the supergateway sidecar, which runs the MCP server locally. This sidecar manages the connection, often translating from a simple stdio interface to a more complex transport if needed. This pattern is common for enabling local tool execution, as seen in many user setups on platforms like Reddit.
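The stdio side of this pattern can be sketched in a few lines: the host spawns a child process and exchanges newline-delimited JSON messages over its standard input and output. The child here is a tiny stand-in script written for this example, not a real MCP server or the supergateway tool, and it skips the initialization handshake.

```python
import json
import subprocess
import sys

# Hypothetical stand-in for a local MCP server: it answers every JSON line
# received on stdin with an empty tool list on stdout.
SERVER_SOURCE = r'''
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": []}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
'''


def call_over_stdio(message: dict) -> dict:
    """Spawn the child, send one message, read one reply, then shut it down."""
    proc = subprocess.Popen(
        [sys.executable, "-c", SERVER_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        proc.stdin.write(json.dumps(message) + "\n")  # one message per line
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()        # end of input tells the child to exit
        proc.wait(timeout=5)
        proc.stdout.close()


reply = call_over_stdio({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
```

A real host keeps the child running for the whole session instead of spawning it per message; the one-shot form just keeps the example short.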

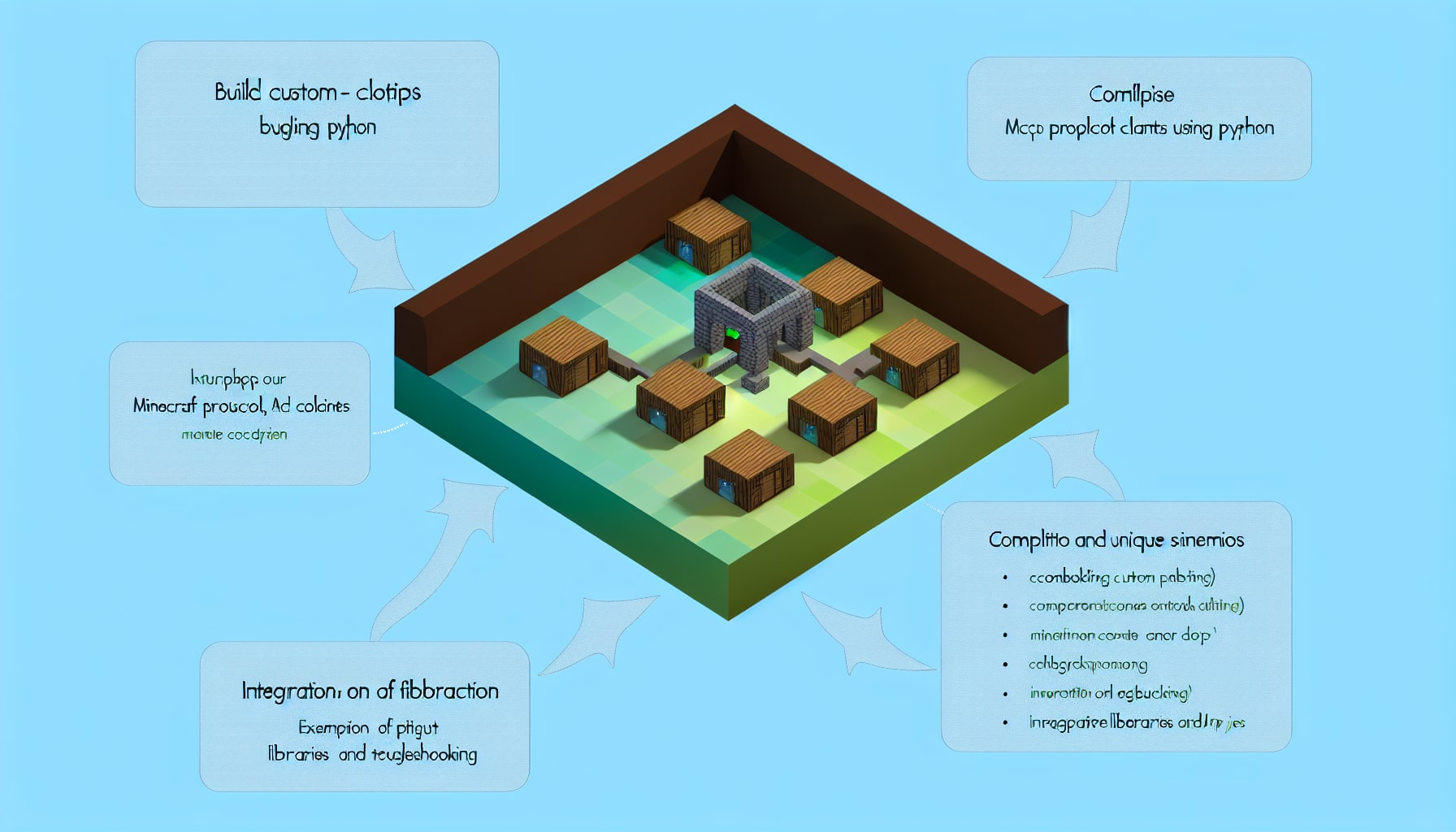

Practical Applications: Building Your Own MCP Client

Understanding the theory is one thing; putting it into practice is another. Thanks to a growing number of open-source libraries, building a custom MCP client to connect your own AI agents to tools is more accessible than ever. This allows you to move beyond pre-packaged applications and create tailored AI solutions.

Using Python libraries like mcp-use, you can create a custom MCP client in just a few lines of code to connect any tool-calling LLM to one or more MCP servers.

Steps for Building a Custom Client

Let’s walk through building a client with the popular Python library mcp-use. The process is straightforward:

- Installation: First, install the necessary packages. You’ll need `mcp-use` and a LangChain library for your chosen LLM, like `langchain-openai`.
- Configuration: Define which MCP servers you want to connect to. This can be done in your Python code as a dictionary or in a separate JSON file. The configuration specifies the command needed to start the server, such as `npx -y @playwright/mcp@latest` to run a web-browsing tool.
- Initialization: Create an `MCPClient` instance from your configuration. Then, initialize an `MCPAgent` (which is compatible with LangChain’s tool-calling interface) by passing it your chosen LLM and the client instance.
- Execution: Now you can simply run queries. The agent will automatically reason about which tool to use, and the client will handle the communication with the MCP server.

This approach abstracts away the complexities of managing server processes and communication streams, letting you focus on the agent’s logic.
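Put together, the four steps above might look like the sketch below. It follows the usage pattern shown in the mcp-use quickstart as I understand it (`MCPClient.from_dict`, `MCPAgent`, `agent.run`, `close_all_sessions`), so check the library’s current documentation before relying on exact names. The model name and the `max_steps` value are assumptions, and running it requires Node.js for `npx` plus an OpenAI API key in your environment.

```python
# Step 2: server configuration. The Playwright command comes from the text
# above; the "mcpServers" layout is the convention used by mcp-use.
CONFIG = {
    "mcpServers": {
        "playwright": {
            "command": "npx",
            "args": ["-y", "@playwright/mcp@latest"],
        }
    }
}


async def run_query(query: str) -> str:
    # Step 1 (installation): pip install mcp-use langchain-openai
    # Imported lazily so CONFIG can be inspected without the packages installed.
    from langchain_openai import ChatOpenAI
    from mcp_use import MCPAgent, MCPClient

    # Step 3: create the client from the config, then the agent.
    client = MCPClient.from_dict(CONFIG)
    llm = ChatOpenAI(model="gpt-4o")  # model name is an assumption
    agent = MCPAgent(llm=llm, client=client, max_steps=30)

    # Step 4: run the query; the agent picks tools, the client talks to servers.
    try:
        return await agent.run(query)
    finally:
        await client.close_all_sessions()


# Usage, from a script:
#   import asyncio
#   print(asyncio.run(run_query("Summarize the top story on example.com")))
```

Keeping the configuration as plain data means the same dictionary can be saved to a JSON file and shared between projects.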

Multi-Server Support and Dynamic Tool Selection

A powerful feature of libraries like mcp-use is the ability to connect to multiple MCP servers at once. You can define servers for web browsing, file operations, and database queries all in the same configuration.

The `mcp_use` library is designed to simplify the process of connecting LLMs to MCP servers. With just a few lines of code, you can set up an MCP-capable agent. It supports configuring multiple MCP servers, and agents can dynamically choose the most appropriate server for a given task.

This dynamic selection is crucial for efficiency. Instead of overwhelming the agent with a massive list of tools from all servers, the client can intelligently manage connections, ensuring the agent only considers the most relevant tools for the task at hand.
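To give a feel for the idea, here is a deliberately naive sketch of server selection based on word overlap between the task and a short description of each server. This is not how mcp-use implements it; the server names, descriptions, and the `rank_servers` helper are hypothetical and exist only to illustrate narrowing the candidate set before the agent sees any tools.

```python
# Hypothetical catalogue: server name -> short description of what its tools do.
SERVER_DESCRIPTIONS = {
    "browser": "open web pages, click links, scrape site content",
    "files": "read, write and list local files and directories",
    "database": "run sql queries against a database and list tables",
}


def rank_servers(task, descriptions=SERVER_DESCRIPTIONS, limit=1):
    """Return up to `limit` server names whose descriptions best match the task."""
    task_words = set(task.lower().split())
    scores = {
        name: len(task_words & set(text.replace(",", " ").split()))
        for name, text in descriptions.items()
    }
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores, key=lambda name: (-scores[name], name))
    return [name for name in ranked if scores[name] > 0][:limit]


chosen = rank_servers("list the tables in the sales database")
```

A production system would more plausibly let the LLM choose, or use embeddings, but the effect is the same: the agent’s prompt carries a handful of relevant tools instead of every tool from every server.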

Typical AI Agent Integration Example

Integrating an MCP client into an agent framework is seamless. For instance, the mcp-use library provides MCPAgent, which is ready to use out of the box. For more custom setups, you can use adapters to convert MCP tools into a format that frameworks like AutoGen (AG2) or CAMEL-AI can natively understand. The AG2 documentation shows how to wrap an MCP session into a toolkit that can be registered directly with an agent, making external tools available just like any other function.
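Conceptually, such an adapter is mostly a reshaping step. The sketch below converts an MCP tool descriptor (with the `name`, `description`, and `inputSchema` fields from the MCP specification) into the OpenAI-style function-calling format that many frameworks accept. It is not the AG2 or CAMEL-AI adapter API, and the `search_docs` tool is invented for illustration.

```python
def mcp_tool_to_function_schema(tool: dict) -> dict:
    """Reshape one MCP tool descriptor into an OpenAI-style function schema."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP already describes inputs as JSON Schema, so it maps across directly.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


# Invented example of what a server might return from a tool listing.
mcp_tool = {
    "name": "search_docs",
    "description": "Search the documentation index",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}

function_schema = mcp_tool_to_function_schema(mcp_tool)
```

The other half of an adapter is the reverse trip: when the model emits a function call, the framework forwards its name and arguments to the MCP client as a tool call.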

The MCP Client Ecosystem and Future Trends

The adoption of the Model Context Protocol has led to a vibrant and rapidly expanding ecosystem. A wide array of clients, tools, and frameworks have emerged, catering to different needs, from individual developers tinkering on the command line to large enterprises building complex automations. Surveying this landscape helps in choosing the right tools for your project.

The MCP ecosystem is expanding from developer-focused IDEs and CLIs to user-friendly frameworks, with a clear trend towards making tool integration accessible to everyone.

A Look at Mainstream MCP Clients

The client landscape is diverse. For end-users, Claude Desktop provides native MCP support for running local tools. For developers, code editors like Cursor were among the first to integrate MCP, allowing AI to interact with your codebase and development environment. Command-line enthusiasts can use clients like Claude Code or Goose CLI for a lightweight interface. For those building custom applications, Python libraries like mcp-use and frameworks like CAMEL-AI or AG2 provide the building blocks to create bespoke MCP clients tailored to any need.

The Importance of MCP for Tool Extension

MCP’s real power lies in its standardization. By creating a common protocol, it fosters a marketplace of interchangeable tools. Developers can build an MCP server once and have it work with any compliant client. This prevents vendor lock-in and encourages the creation of a rich library of public tools. Hubs like mcpservers.org and community-curated lists like awesome-mcp-servers are becoming central registries for discovering and sharing these tools.

Future Outlook for Agent-MCP Integration

While the current ecosystem is heavily geared towards developers, the future is about accessibility. We are already seeing the emergence of low-code platforms like Make.com that can expose complex automation workflows as simple MCP servers. This trend will continue, bridging the gap for non-technical users.

There’s a MASSIVE gap in the market right now. Claude Desktop is basically your only option [for non-engineers], and even that requires technical knowledge.

This gap identified by market watchers is precisely where the next wave of innovation will occur. Future agent frameworks will likely offer even more seamless, “plug-and-play” MCP integration, making it trivial to equip AI agents with new skills from a vast, interconnected library of tools.

Conclusion

The MCP client is the linchpin that connects the reasoning power of AI agents to the practical capabilities of the real world. It transforms an isolated language model into a functional assistant that can browse the web, analyze your files, and interact with thousands of external services. Built on a simple but robust architecture of SSE and JSON, and now made accessible through powerful libraries like mcp-use, the ability to create and deploy these clients is firmly in the hands of developers.

The ecosystem is maturing at an impressive pace. For anyone building with AI, now is the perfect time to start experimenting. Whether you’re configuring an existing client like Claude Desktop or writing a few lines of Python to build a custom one, you are tapping into a foundational piece of modern AI infrastructure. By connecting your agents to the world, you unlock their true potential to solve real problems.
